That’s billions with a “b”:

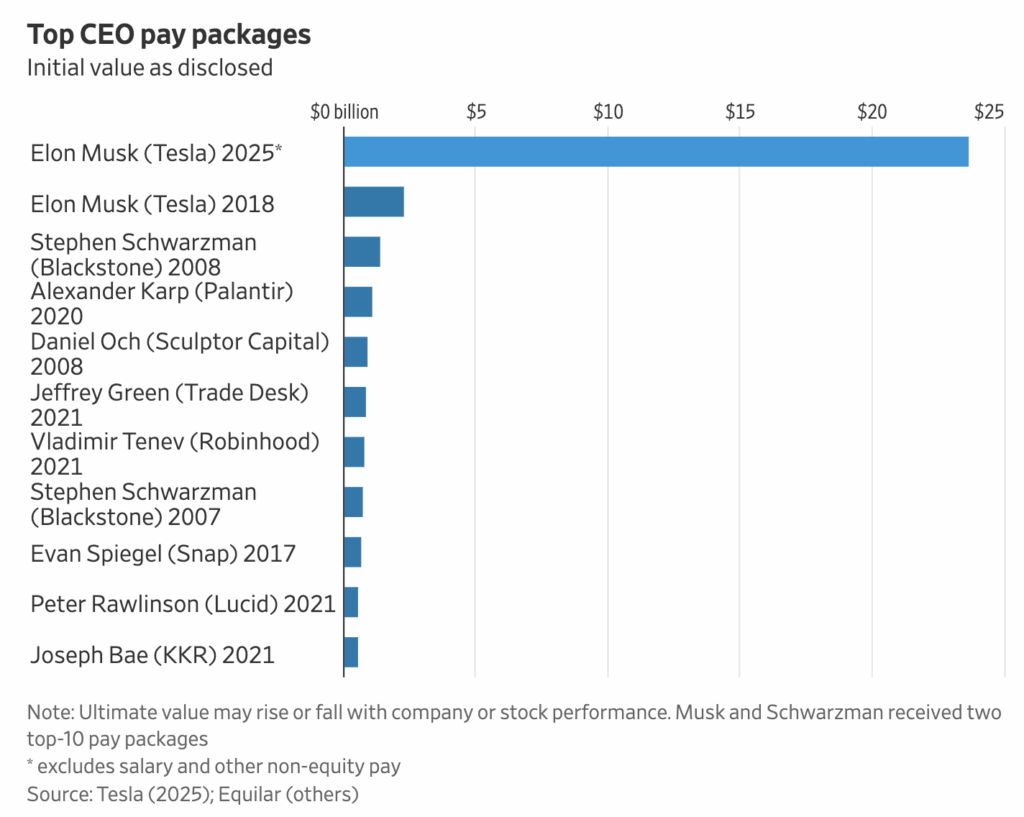

Tesla took a “first step” to keep its leader Elon Musk focused on the struggling electric-vehicle maker, awarding the world’s richest man one of the biggest-ever stock awards to stick around for at least two years.

Tesla’s board approved a stock award for Musk that it tentatively valued at $23.7 billion, which he can claim in two years unless a court rescues his prior, larger stock-option grant. Musk has run Tesla without a pay package since his $50 billion option award was tossed by a court in 2024.

The electric-vehicle maker said its “interim award” of 96 million shares will vest as long as Musk remains on the job as chief executive or under another executive title heading product development or operations, according to a securities filing. It described the award as a “first step, good faith payment” to keep the world’s richest man engaged.

Tesla’s business has been in a funk this year amid falling vehicle sales as investors fret about how much time Musk is spending on other pursuits. After stepping back from his work as a White House adviser in late May, he has overseen the launch of Tesla’s robotaxi service. But he also has been rounding up billions in funding for money-losing xAI while SpaceX overcame a Starship rocket explosion in June to successfully send four astronauts to the International Space Station.

To summarize Republican economic ideology, ordinary workers will get too lazy to work if they are provided access to basic healthcare, while already world-historically compensated CEOs cannot reasonably be expected to do their basic jobs without being paid enough money to buy the Dallas Cowboys and the Los Angeles Dodgers and have plenty to spare to pick up a few private islands.

The post CEO receives $24 billion dollar gift to incentivize him doing the job of Chief Executive Officer going forward appeared first on Lawyers, Guns & Money.


I’m not the kind of person to rush to get the newest iPhone even if my plan makes me eligible for a cheap upgrade, but I would make an exception for this:

Apple’s iOS 26 will allow users to filter out texts from unknown senders.

That could be a big financial problem for campaigns that rely on texts for donations.

Apple’s new spam text filtering feature could end up being a multimillion-dollar headache for political campaigns.

iOS 26 includes a new feature that allows users to filter text messages from unrecognized numbers into an “Unknown Senders” folder without sending a notification. Users can then go to that filter and hit “Mark as Known” or delete the message.

In a memo seen by BI and first reported by Punchbowl News, the official campaign committee in charge of electing GOP senators warned that the new feature could lead to a steep drop in revenue.

“That change has profound implications for our ability to fundraise, mobilize voters, and run digital campaigns,” reads a July 24 memo from the National Republican Senatorial Committee, or NRSC.

That’s what you call a win-win!

Of course, this will impact the fundraising of both parties if it works. A lot of people will tell you that it’s incredibly annoying but necessary because it works. At least on the Democratic side, though, it “works” only for the spammers themselves:

The digital deluge is a familiar annoyance for anyone on a Democratic fundraising list. It’s a relentless cacophony of bizarre texts and emails, each one more urgent than the last, promising that your immediate $15 donation is the only thing standing between democracy and the abyss.

The main rationale offered for this fundraising frenzy is that it’s a necessary evil—that the tactics, while unpleasant, are brutally effective at raising the money needed to win. But an analysis of the official FEC filings tells a very different story. The fundraising model is not a brutally effective tool for the party; it is a financial vortex that consumes the vast majority of every dollar it raises.

We all have that one obscure skill we’ve inadvertently maxed out. Mine happens to be navigating the labyrinth of campaign finance data. So, after documenting the spam tactics in a previous article, I told myself I’d just take a quick look to see who was behind them and where the money was going.

That “quick look” immediately pulled me in. The illusion of a sprawling grassroots movement, with its dozens of different PAC names, quickly gave way to a much simpler and more alarming reality. It only required pulling on a single thread—tracing who a few of the most aggressive PACs were paying—to watch their entire manufactured world unravel. What emerged was not a diverse network of activists, but a concentrated ecosystem built to serve the firm at its center: Mothership Strategies.

[…]

After subtracting these massive operational costs—the payments to Mothership, the fees for texting services, the cost of digital ads and list rentals—the final sum delivered to candidates and committees is vanishingly small. My analysis of the network’s FEC disbursements reveals that, at most, $11 million of the $678 million raised from individuals has made its way to candidates, campaigns, or the national party committees.

But here’s the number that should end all debate:

This represents a fundraising efficiency rate of just 1.6 percent.

Here’s what that number means: for every dollar a grandmother in Iowa donates believing she’s saving democracy, 98 cents goes to consultants and operational costs. Just pennies reach actual campaigns.

Of course, the party doesn't need any technological change to fix this: it can and should just stop fundraising in a way that alienates countless voters while not actually raising any more money.

The post An upgrade you can use appeared first on Lawyers, Guns & Money.

As satisfying as a successful real-time strategy game campaign can be, dealing with a complex RTS map can often be overwhelming. Keeping track of multiple far-flung resource-production bases, groups of units, upgrade trees, and surprise encounters with the enemy requires a level of attention-splitting that can strain even the best multitaskers.

Then there's The King Is Watching, which condenses the standard real-time strategy production loop into an easy-to-understand single-screen interface, complete with automatic battles. The game's unique resource management system—combined with well-designed, self-balancing difficulty and randomized upgrades that keep each run fresh—makes The King Is Watching one of the most enjoyable pick-up-and-play strategy titles I've encountered in a long time.

What is the king watching, exactly?

The bulk of the action in The King Is Watching takes place on a small, 5x5 grid of squares representing your castle. That's where you'll place blueprint tiles from your hand that then start producing the basic and refined resources necessary to place even more blueprints. Those resources also power the factories that crank out defensive units that automatically fight to protect your castle from periodic waves of enemies (in adorable pixelated animations that take place on a battlefield to the right of your castle).

A lot of good resource tiles there, but the king can only look at five squares at a time here. (Credit: Hypnohead / Steam)

Sounds simple enough. But the entire castle can't be actively productive at once. Instead, only a few contiguous tiles within the area of the "king's gaze" can make resources and/or units at any one time.

That means you have to constantly and actively drag and rotate your "gaze" around your castle, prioritizing the resources and units you need immediately and leaving others that can wait for the king's attention. You have to be careful with the relative positioning of your blueprints so that units and resources that complement each other can be produced in the same "gaze" without a lot of unproductive downtime (say, when you've hit your unit limit) or resource droughts.

You might start off with one corner of the grid devoted to basic resources and another to basic unit production, shifting your gaze quickly between the two as your unit numbers and/or resource stockpiles dwindle. Later, you might add a market to generate the gold you'll need for the squares that produce stronger units or a crystal mine that will help supply powerful magical units you create later in the run.

If you can upgrade all the way to a Tesla tower (seen here), you're probably in OK shape... (Credit: Hypnohead / Steam)

You might also invest your treasure in upgrades that make certain tiles produce more quickly or in global upgrades that expand your gaze or let you station more troops on the field at once. And as your grid fills up, you must figure out which underpowered or less-useful tiles to destroy to make space for the more powerful blueprints you've hopefully earned or generated to replace them.

Try to keep up

Shifting your gaze and adjusting your production tiles plays out like a simple puzzle game, requiring effective juggling of both time and space to be maximally efficient. You need to constantly split your attention between your present resource and unit needs and your near- and far-future plans for upgrades. As frantic as this can get, it never feels unmanageable, thanks in large part to an excellent user interface that packs practically everything you need to know onto a single screen, no camera-fiddling or complex keyboard shortcuts required.

The King Is Watching also makes use of an ingenious kind of partially self-selecting difficulty system, where you periodically pick from a randomized set of "prophecies" to "predict" how many and which enemies will come in the next few waves (and which potential upgrades they might give you when they're gone). This leads to a delightfully fraught balance of risk and reward, forcing you to ride the edge of survivability in each wave. Choose too many enemies and you could be overwhelmed before your defensive units are built up. Choose too few and you won't earn the requisite upgrades needed to work through the overpowered boss waves later.

The kinds of randomized options you'll have to choose between waves.

Those inter-wave upgrades are also randomized in each run, adding some roguelike unpredictability that means no two play sessions develop quite the same way. You have to be flexible, adapting to the blueprints and units you're given, while being willing to abandon plans that are no longer feasible.

Between waves, you'll often get the opportunity to buy emergency resource drops, useful upgrades that last through the whole run, or one-time spells that can strengthen your units or hinder the opposition. Figuring out the best potential upgrade paths requires a lot of trial and error, and you'll need a little luck in drawing some of the more powerful upgrade options. While experience and skill can make things more manageable, some runs end up a lot more winnable than others.

Playing through successful waves also earns you tokens you can spend between runs on permanent upgrades (including crucial expansions of that castle grid) and new selectable kings with their own unique gaze shapes and special powers. But even as these upgrades make it easier to succeed in successive runs, the game cranks up the "threat level" in turn to raise the enemy strength level (and the stakes) accordingly.

That kind of self-balancing means The King Is Watching always manages to feel engaging without coming off as totally unfair. And individual runs are zippy enough not to wear out their welcome; you can make it through a full run of two or three bosses in about 30 minutes, especially if you use the "fast forward" option to speed up the routine resource production between enemy waves.

Best of all, those 30 minutes are so dense with important decisions and split-second management of your kingly gaze that you never have time to feel bored. The King Is Watching perfectly rides the fine line between engrossing and overwhelming, making it ideal for quick, lunch-break-sized brain breaks that combine positional reasoning, reflexes, and strategic planning.

Despite the protests of millions of Americans, the Corporation for Public Broadcasting (CPB) announced it will be winding down its operations after the White House deemed NPR and PBS a "grift" and pushed for a Senate vote that eliminated its entire budget.

The vote rescinded $1.1 billion that Congress had allocated to CPB to fund public broadcasting for fiscal years 2026 and 2027. In a press release, CPB explained that the cuts "excluded funding for CPB for the first time in more than five decades." CPB president and CEO Patricia Harrison said the corporation had no choice but to prepare to shut down.

"Despite the extraordinary efforts of millions of Americans who called, wrote, and petitioned Congress to preserve federal funding for CPB, we now face the difficult reality of closing our operations," Harrison said.

Concerned Americans also rushed to donate to NPR and PBS stations to confront the funding cuts, The New York Times reported. But those donations, estimated at around $20 million, ultimately amounted to too little, too late to cover the funding that CPB lost.

As CPB takes steps to close, it expects that "the majority of staff positions will conclude with the close of the fiscal year on September 30, 2025." After that, a "small transition team" will "ensure a responsible and orderly closeout of operations" by January 2026. That team "will focus on compliance, final distributions, and resolution of long-term financial obligations, including ensuring continuity for music rights and royalties that remain essential to the public media system."

"CPB remains committed to fulfilling its fiduciary responsibilities and supporting our partners through this transition with transparency and care," Harrison said.

NPR mourns loss of CPB

In a statement, NPR's president and CEO, Katherine Maher, mourned the loss of CPB, warning that it was a "vital source of funding for local stations, a champion of educational and cultural programming, and a bulwark for independent journalism."

The agency's shuttering won't just impact public media during Trump's term, Maher suggested, but will cause "ripple effects" across "every public media organization and, more importantly, in every community across the country that relies on public broadcasting."

"We're grateful to CPB staff for their many years of service to public media," Maher said.

Trump's attacks on CPB, which also included suing CPB directors who refused to be fired, are part of a broader campaign to eliminate what he views as anti-conservative bias in media. Most recently, Trump's Federal Communications Commission appointed a bias monitor to babysit CBS's programming. Critics fear that could make it easier for Trump to censor CBS News and "silence dissidence." These fights to control the news come at a time when access to local news is shrinking and having an "insidious effect on" democracy, the American Journalism Project has warned.

Vowing to help support local stations to fill the gap and keep the public informed, Maher noted that the closure of CPB also "represents the loss of a major institution and decades of knowledge and expertise" that has guided how the US fosters media as a public service for 60 years.

"As an independent, nonprofit news organization, NPR remains resolute in our pursuit of our mission: to create an informed and inspired public in partnership with our Member stations," Maher said. "We will continue to respond to this crisis by stepping up to support locally owned, nonprofit public radio stations and local journalism across the country, working to maintain public media's promise of universal service, and upholding the highest standards for independent journalism and cultural programming in service of our nation."

Harrison thanked partners for helping to keep CPB's mission alive.

"Public media has been one of the most trusted institutions in American life, providing educational opportunity, emergency alerts, civil discourse, and cultural connection to every corner of the country," Harrison said. "We are deeply grateful to our partners across the system for their resilience, leadership, and unwavering dedication to serving the American people."